Any technology can be used for good or evil purposes. And chatbots are no exception. Most chatbots are there to help you get the answer you need in the fastest possible way. Good chatbots show empathy and make sure the conversation with a visitor feels as natural as possible. But the goal is never to fool the human, which could even be illegal in some regions.

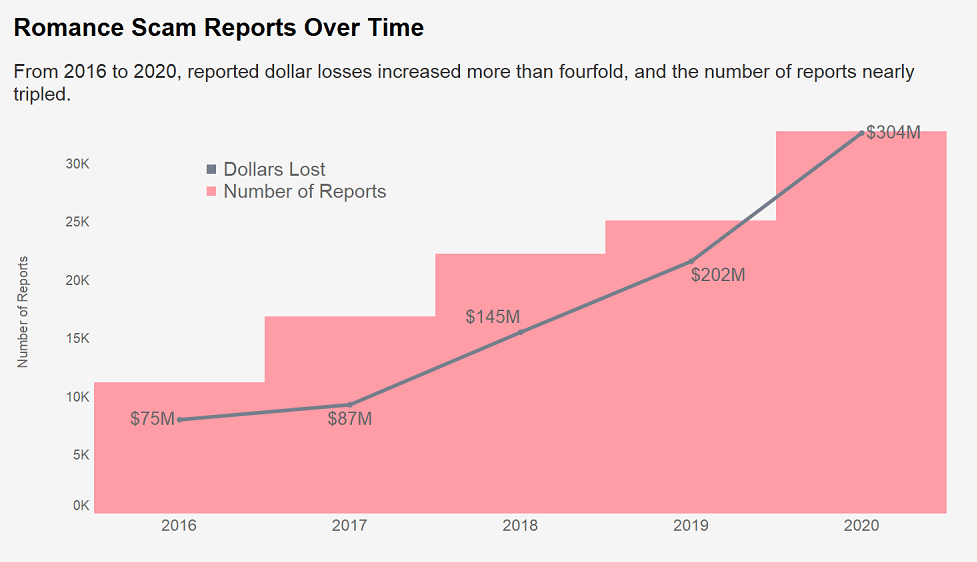

But there are always exceptions, especially in dating apps. As shown in the featured image, reported losses to romance scams reached a record $304 million in 2020, according to a report from the US Federal Trade Commission. That is a 50% increase from 2019, and a direct result of the pandemic and the lockdowns that forced people to move their relationships online. In this post, I give you some hints to detect when you are talking to a malicious bot. Trust me, it’s easier than it seems!

1. Be creative when choosing your conversation topics

General knowledge bots able to talk about almost anything are very challenging to build. This is why, for many years, the Turing test was considered a key measure of artificial intelligence (not really the case nowadays, but this is more of a philosophical discussion for another day). Some research results like Google’s Meena, Microsoft’s DialoGPT or GPT-3 are promising, but they are not generally available or not cheap enough to be used in a massive scam.

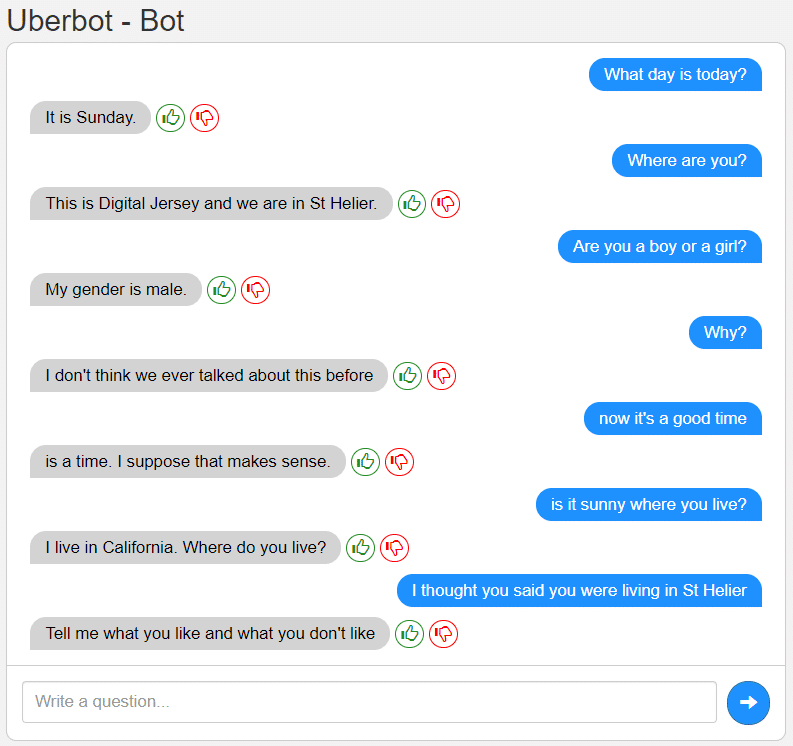

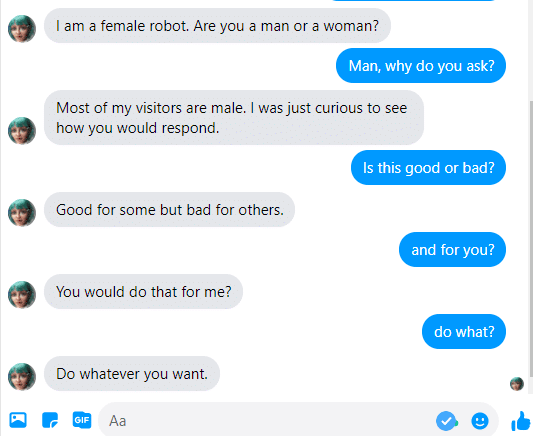

And even if they were available, it’s still rather easy to make them fall into a trap just by leaving the most common conversation paths. The two images in this section depict my quick conversations with two general chatbots that are available for you to play with: Kuki and UberBot. As you can see, they got lost rather quickly, even though, as Loebner Prize finalists, they are supposed to be top-notch. This just proves how difficult it is to build a bot able to understand anything! The chatbots that will try to fool you are much less powerful and therefore even easier to detect.

Chatting with Uberbot

Chatting with Kuki

2. Look for repetitive patterns

Chatbots typically reply using the same answer pattern every time you ask the same question. Humans are not good at that, even if we try. Ask me the same thing twice, and I’ll give you two slightly different answers. It’s not technically difficult to add some randomness to a bot’s responses, but it does imply additional work (at the very least you need to predefine alternative answers), so most bots skip this.
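To make the point about extra work concrete, here is a minimal sketch in Python. It is not how any particular bot is implemented; the question, the answers and the function names (`fixed_reply`, `varied_reply`) are all made up for illustration. It only shows the difference between a bot with one canned answer per question and one whose designer took the time to write alternatives.

```python
import random
from typing import Optional

# Hypothetical canned answer: one fixed reply per known question.
CANNED = {
    "where are you from?": "I am from London.",
}

# Hypothetical alternatives that the bot designer has to write by hand.
VARIED = {
    "where are you from?": [
        "I am from London.",
        "London, born and raised.",
        "I grew up in London. You?",
    ],
}

FALLBACK = "Tell me more."


def normalize(question: str) -> str:
    """Lowercase and trim the question so lookups are less brittle."""
    return question.lower().strip()


def fixed_reply(question: str) -> str:
    """Always return the same answer for the same question."""
    return CANNED.get(normalize(question), FALLBACK)


def varied_reply(question: str, rng: Optional[random.Random] = None) -> str:
    """Pick one of the predefined alternatives at random."""
    chooser = rng if rng is not None else random
    options = VARIED.get(normalize(question), [FALLBACK])
    return chooser.choice(options)


if __name__ == "__main__":
    q = "Where are you from?"
    print([fixed_reply(q) for _ in range(3)])   # identical every time
    print([varied_reply(q) for _ in range(3)])  # may differ between calls
```

Asking the same question several times exposes the first bot immediately. The second one survives a little longer, but only for the questions somebody bothered to write alternatives for.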

3. Ask about recent events

Bots don’t read newspapers. They will not be aware of anything that has happened in the world since the last time they were trained, and only very advanced bots come with search functionality to look up recent events online. So ask about recent events. Even better, local ones. And have fun with the answer.

Ah, and ambiguity is also something they are really not good at. When we are faced with ambiguous expressions we use all our own social context and past experiences to try to assign the right meaning to the sentence. Bots cannot rely on that and making this knowledge explicit is another major hurdle for bot designers. Same for sarcasm.

4. Talk in any language except for English

If you live in a non-English-speaking country, you’re lucky. Most resources for training general bots are in English or in other very popular languages (Spanish, French, ...). If you speak another language, use it to talk with the bot. I guarantee you that if somebody on Tinder is able to reply to a message in Catalan, it’s not a bot, as Catalan chatbots are almost impossible to find. The same goes for many other languages.

5. Malicious chatbots don’t really want to chat

They want you to take some action that will benefit them. So, after a few sentences, they will share a link with you to continue the exchange outside the platform, for instance with the excuse of showing you some videos or verifying some data. At that point, they will either install some type of malware or try to get some personal data out of you. They could also just ask for money with a variety of excuses: they need to buy a phone to keep chatting with you, pay for a trip to visit you, cover some medical expense, and so on. Remember, they don’t want you, they want your money.

6. Trust the chat, not the image

Artificial Intelligence is much better at generating fake images than fake conversations. Sites like ThisPersonDoesNotExist or WhichFaceIsReal show how realistic fake images can be. And since it’s also possible to infer your age and gender from a picture, bots could even aim to generate the right photo for your profile. So, don’t get too excited about a profile photo. Make sure that photo can actually talk!

This person does not really exist

7. Common sense

Same as with any other type of relationship: if it seems too good to be true, it probably is. Chances are that you’re not so lucky as to attract the interest of so many wonderful people, especially if your success rate in the physical world is much lower. So always ask yourself whether this is really happening or whether you are just the target of a scammer. Keep in mind that scammers could be combining bots with a live chat option, especially if they are after a significant scam. The bots can be used to filter out the people less likely to fall for the scam and move the best candidates to a live chat with the scammers themselves for the final convincing.

Also, keep in mind that a bot does not need to meet all the conditions above. There is no single sign that will determine with 100% certainty that somebody is a bot. It’s the combination of suspicions across several of the aspects listed above.

But what if I block her out and she wasn’t a bot?

Sure, it could happen. Maybe she (or he) wasn’t a bot after all. But if somebody is so simple and boring as to be confused with a bot, it’s probably good that you blocked that person anyway.

Not all scammers are bots.