My goal is to go over our new NLU intent classifier engine for chatbots to show how you can use it to:

- (obviously) Create your own chatbots (pairing it up with Xatkit or any other chatbot platform for all the front-end and behaviour processing components)

- Learn about natural language processing by playing with the code and executing it with different parameter combinations

- Demystify the complexity of building an NLU engine (thanks to the myriad of wonderful open-source libraries and frameworks available) and provide you with a starting point you can use to build your own.

Ready for some NLP fun? We’ll first give some context about the project and then we’ll take a deep dive into the NLU engine code.


What is an intent classifier?

An intent (or intention) is a user question or request that a chatbot should be able to recognize, for instance each of the questions in a FAQ-like chatbot. To answer the user's input request (called a user utterance), the bot first needs to understand what the user is asking about.

An intent classifier (also known as intent recognition, intent matching, intent detector,…) is then the function that, given the set of intents the bot can understand and an utterance from the user, returns for each intent the probability that the user is asking about it.
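To make this input/output contract concrete, here is a deliberately naive sketch: a function that takes the intents a bot understands (each with a few training sentences) and a user utterance, and returns a score between 0 and 1 per intent. The `classify` name and the word-overlap (Jaccard) scoring are illustrative assumptions only; they are not how Xatkit computes its probabilities.

```python
import re

def tokenize(text: str) -> set[str]:
    """Lowercase the text and split it into a set of words."""
    return set(re.findall(r"[a-z']+", text.lower()))

def classify(utterance: str, intents: dict[str, list[str]]) -> dict[str, float]:
    """Toy intent classifier: for each intent, keep the best word overlap
    (Jaccard similarity) between the utterance and its training sentences."""
    words = tokenize(utterance)
    scores = {}
    for intent, sentences in intents.items():
        best = 0.0
        for sentence in sentences:
            sentence_words = tokenize(sentence)
            union = words | sentence_words
            if union:
                best = max(best, len(words & sentence_words) / len(union))
        scores[intent] = best
    return scores

intents = {
    "greeting": ["hi", "hello there", "good morning"],
    "opening_hours": ["when are you open", "what are your opening hours"],
}
print(classify("hello", intents))  # {'greeting': 0.5, 'opening_hours': 0.0}
```

A real classifier replaces the overlap score with a trained model, but the signature (intents plus utterance in, one score per intent out) stays the same.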

Nowadays, intent classifiers are typically implemented as multi-class classifier neural networks.

Does the world really need yet another NLU chatbot engine?

Probably not. In fact, with Xatkit we aim to be a chatbot orchestration platform precisely to avoid reinventing the wheel and the not-invented-here syndrome. So, in most cases, other existing platforms (like Dialogflow or nlp.js) will work just fine. But we have also realized that there are always some particularly tricky bots for which you really need to be able to customize your engine to the specific chatbot semantics to get the results you want.

And this is sometimes impossible (for platforms whose source code is not available, which, by the way, is the norm) or difficult (e.g. if you’re not ready to invest lots of time trying to understand how the platform works, or if the platform is not designed to easily allow for customizations).

And of course, if your goal is to learn natural language processing techniques, there is nothing better than writing some NLP code :-). Ours is simple enough to give you a kick-start.

What makes our NLU engine different?

Xatkit’s NLU Engine is (or, better said, will be: what we’re releasing now is still an alpha version with limited functionality, good for playing and learning but not for production use 😉) a flexible and pragmatic chatbot engine. Here are a couple of examples of this flexible and pragmatic approach.

Xatkit lets you configure almost everything

The data processing, the training of the network, the behaviour of the classifier itself, etc. can all be configured. Every time we make a design decision, we create a parameter for it so that you can choose whether to follow our strategy or not.
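As an illustration of this "every decision is a parameter" approach, a configuration object could look like the sketch below. The class name, field names, and default values are hypothetical and chosen for the example; the actual options live in the core package of the repository.

```python
from dataclasses import dataclass, replace

@dataclass(frozen=True)
class NluConfiguration:
    """Hypothetical configuration: each design decision becomes a parameter."""
    num_words: int = 1000               # vocabulary size kept by the tokenizer
    max_sequence_length: int = 30       # length all token sequences are padded/truncated to
    embedding_dim: int = 128            # size of the word embedding layer
    epochs: int = 300                   # training epochs for each context model
    discard_oov_sentences: bool = True  # return "no match" if every word is unknown
    check_exact_match: bool = True      # exact training sentence -> confidence 1.0
    lower_case: bool = True             # normalize text before tokenizing

default_config = NluConfiguration()
# Opt out of one of our design decisions without touching the others.
custom_config = replace(default_config, check_exact_match=False, epochs=50)
print(custom_config.check_exact_match, custom_config.epochs, custom_config.max_sequence_length)
```

Making the object frozen and deriving variants with `dataclasses.replace` keeps the defaults untouched, so different bots can run with different strategies side by side.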

Xatkit creates a separate neural network for each bot context

We see bots as having different conversation contexts (e.g. as part of a bot state machine). When in a given context, only the intents that make sense in that context should be evaluated when considering possible matches.

A Xatkit bot is composed of contexts, where each context may include a number of intents (see the dsl package). During the training phase, an NLP model is trained on the training sentences of those intents and attached to the context for future predictions.
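The sketch below mirrors that organization with plain Python dataclasses: a bot holds contexts, a context holds intents, and training produces one independent model per context. The class and field names are simplified assumptions rather than the exact definitions in the dsl package, and the trainer is a placeholder callable instead of a real Keras model.

```python
from dataclasses import dataclass, field
from typing import Any, Callable, Optional

@dataclass
class Intent:
    name: str
    training_sentences: list[str]

@dataclass
class Context:
    name: str
    intents: list[Intent] = field(default_factory=list)
    nlp_model: Optional[Any] = None  # filled in after training this context

@dataclass
class Bot:
    name: str
    contexts: list[Context] = field(default_factory=list)

def train_bot(bot: Bot, train_context: Callable[[Context], Any]) -> None:
    """Train one independent model per context and attach it to that context."""
    for context in bot.contexts:
        context.nlp_model = train_context(context)

bot = Bot("shop_bot", [
    Context("main_menu", [Intent("greeting", ["hi", "hello"]),
                          Intent("opening_hours", ["when are you open"])]),
    Context("order", [Intent("cancel_order", ["cancel my order"])]),
])
# Placeholder trainer: record which intents each context's model can predict.
train_bot(bot, lambda ctx: [intent.name for intent in ctx.intents])
print(bot.contexts[1].nlp_model)  # ['cancel_order']
```

Because each context gets its own model, an utterance received in the "order" context is only ever compared against the intents of that context.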

Xatkit understands that a neural network is not always the ideal solution for intent matching

What if the user input text is full of words the neural network has never seen before? It’s safe to assume that we can directly determine there is no match and move the bot to a default fallback state.

Or what if the input text is a perfect literal match to one of the training sentences? Shouldn’t we assume that’s the intent to be returned with maximum confidence?
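Both shortcuts can be expressed as a cheap check that runs before the network is even called. The following is a minimal sketch assuming whitespace tokenization and a vocabulary built from the training sentences; the `shortcut_prediction` name and its return convention (`None` meaning "let the model decide") are hypothetical.

```python
def shortcut_prediction(utterance, intents, vocabulary):
    """Hypothetical pre-checks run before calling the neural network.

    Returns a dict of intent -> confidence, or None if the network should decide.
    """
    text = utterance.lower().strip()
    words = text.split()
    # 1. No known word at all: report no match so the bot can go to its fallback state.
    if not any(word in vocabulary for word in words):
        return {name: 0.0 for name in intents}
    # 2. Literal match with a training sentence: return that intent with full confidence.
    for name, sentences in intents.items():
        if text in (s.lower() for s in sentences):
            return {other: (1.0 if other == name else 0.0) for other in intents}
    return None  # neither shortcut applies: ask the model

intents = {"greeting": ["Hello there", "Hi"], "goodbye": ["See you later", "Bye"]}
vocabulary = {w for sentences in intents.values() for s in sentences for w in s.lower().split()}
print(shortcut_prediction("xyzzy plugh", intents, vocabulary))  # {'greeting': 0.0, 'goodbye': 0.0}
print(shortcut_prediction("hello there", intents, vocabulary))  # {'greeting': 1.0, 'goodbye': 0.0}
print(shortcut_prediction("hello friend", intents, vocabulary))  # None
```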

These kinds of pragmatic decisions are at the core of Xatkit and make it a really useful chatbot-specific intent matching project.

Show me code!

At the core of the intent classifier we have a Keras / TensorFlow model. But before training the network and using it for prediction, we need to make sure we properly process the training data (the pairs of training sentences and intents).

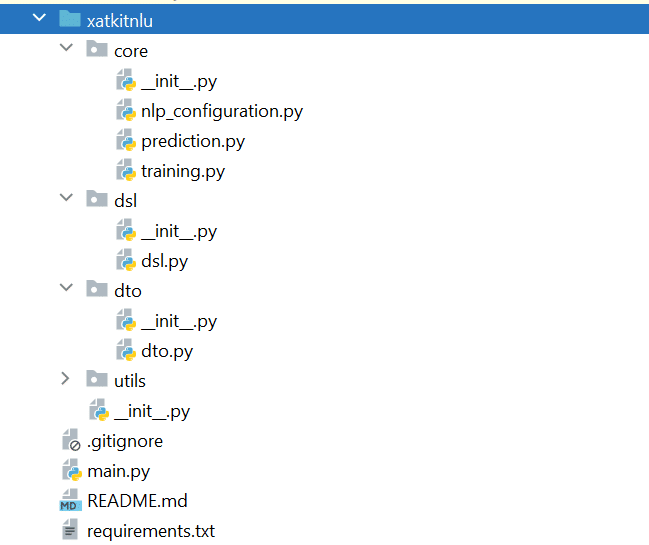

So, overall, the project structure is the following:

main.py holds the API definition (thanks to the FastAPI framework). The dsl package has the internal data structures storing the bot definition. The dto package contains a simplified version of the dsl classes to facilitate the API calls. Finally, the core package includes the configuration options and the core prediction and training functions.

Core Neural Network definition

At the core of the Xatkit NLU engine we have a Keras/TensorFlow model.

The layers and parameters are rather standard for a classifier network. Two aspects worth mentioning:

- The number of classes depends on the value of `len(context.intents)`: we have as many classes as intents defined in a given bot context.

- We use a sigmoid activation function in the last layer because intents are not always mutually exclusive, so we want to get the probability of each of them independently.
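To see why the choice of output activation matters, the sketch below applies both softmax and sigmoid to the same raw scores (logits) for three intents. It is plain Python for illustration, not the Keras layer itself: softmax forces the outputs to compete and sum to 1, while sigmoid scores each intent independently, so two intents can both receive a high probability. The logit values are made up for the example.

```python
import math

def softmax(logits):
    """Mutually exclusive classes: probabilities compete and sum to 1."""
    m = max(logits)  # shift by the max for numerical stability
    exps = [math.exp(x - m) for x in logits]
    total = sum(exps)
    return [e / total for e in exps]

def sigmoid(logits):
    """Independent classes: each intent gets its own probability in (0, 1)."""
    return [1.0 / (1.0 + math.exp(-x)) for x in logits]

# Made-up raw scores for three intents, where the first two both fit the utterance.
logits = [3.0, 3.0, -2.0]
print([round(p, 3) for p in softmax(logits)])
print([round(p, 3) for p in sigmoid(logits)])
```

With softmax the two plausible intents end up just under 0.5 each, while with sigmoid both stay above 0.9. In Keras terms, the output layer is something along the lines of `tf.keras.layers.Dense(len(context.intents), activation="sigmoid")`; sigmoid outputs are usually trained with a binary cross-entropy loss.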

Preparing the data for the training

Before training the chatbot using the above ML model, we need to prepare the data for training.

Keep in mind that bots are defined as a set of contexts, where each context has a number of intents and each intent a number of training sentences.

To get the training started, we take the training sentences and link them with their corresponding intents to create the labeled data for the bot.
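A minimal sketch of this labeling step, assuming a context is given as a list of (intent name, training sentences) pairs. The `total_labels_training_sentences` list name mirrors the one mentioned in this post; everything else (the `build_labeled_data` function, `total_training_sentences`, the tuple input format) is hypothetical.

```python
def build_labeled_data(context_intents):
    """Flatten a context's intents into parallel lists of sentences and numeric labels.

    context_intents: list of (intent_name, training_sentences) pairs.
    Each intent gets a numeric id equal to its position in the list.
    """
    total_training_sentences = []
    total_labels_training_sentences = []
    intent_ids = {}
    for index, (intent_name, sentences) in enumerate(context_intents):
        intent_ids[intent_name] = index
        for sentence in sentences:
            total_training_sentences.append(sentence)
            total_labels_training_sentences.append(index)
    return total_training_sentences, total_labels_training_sentences, intent_ids

sentences, labels, ids = build_labeled_data([
    ("greeting", ["hi", "hello there"]),
    ("opening_hours", ["when are you open"]),
])
print(labels)  # [0, 0, 1]
print(ids)     # {'greeting': 0, 'opening_hours': 1}
```

Keeping the `intent_ids` mapping around is what lets the numeric outputs of the model be translated back into intent names at prediction time.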

Some details on the code listing above:

- We assign a numeric value to each intent and use that number when populating the `total_labels_training_sentences` list.

- We use a tokenizer to create a word index for the words in the training sentences by calling `fit_on_texts`. This word index is then used (via `texts_to_sequences`) to transform words into their index values. At this point, the training sentences are sequences of numeric values, which we call `training_sequences`.

- Padding ensures that all sequences have the same length. As always, this length is part of the NLP configuration.

- The model is finally trained by calling the `fit` method.
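The tokenize-then-pad pipeline is easy to reproduce without any library. The pure-Python sketch below roughly mimics what Keras' `Tokenizer.fit_on_texts` and `texts_to_sequences`, plus a padding helper such as Keras' `pad_sequences`, do with default settings: frequency-ranked indices starting at 1 (0 is reserved for padding), unknown words dropped, and zero-padding at the start. It skips Keras' punctuation filtering and is an illustration of the idea, not a drop-in replacement; the helper names are hypothetical.

```python
from collections import Counter

def fit_word_index(texts):
    """Build a word -> index map, most frequent words first; 0 is reserved for padding."""
    counts = Counter(word for text in texts for word in text.lower().split())
    ranked = sorted(counts.items(), key=lambda item: item[1], reverse=True)  # stable for ties
    return {word: index for index, (word, _) in enumerate(ranked, start=1)}

def texts_to_sequences(texts, word_index):
    """Replace each known word by its index; unknown words are dropped."""
    return [[word_index[w] for w in text.lower().split() if w in word_index] for text in texts]

def pad(sequences, maxlen):
    """Keep the last maxlen tokens and left-pad with 0 so every sequence has length maxlen."""
    return [[0] * (maxlen - len(seq[-maxlen:])) + seq[-maxlen:] for seq in sequences]

training_sentences = ["hi", "hello there", "when are you open", "are you open today"]
word_index = fit_word_index(training_sentences)
training_sequences = pad(texts_to_sequences(training_sentences, word_index), maxlen=5)
print(word_index)
print(training_sequences)
```

Here "are", "you", and "open" appear twice, so they get the lowest indices (1, 2, 3), and every sentence becomes a list of exactly five integers ready to be fed to the network.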

Predicting the best intent matches

Once the ML model has been trained, prediction is straightforward. The only important thing to keep in mind is to process the user utterance in the same way we prepared the training data. Note that we also implement one of the optimizations discussed above: we directly return a zero-match prediction for sentences whose tokens have never been seen before by the model.
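A prediction step could be sketched as follows, again with pure-Python stand-ins. The key point is that the utterance goes through exactly the same lowercasing, indexing, and padding as the training sentences, and an empty sequence (every word unknown) short-circuits to a zero-match result. Here `model` is any callable returning one probability per intent, a hypothetical placeholder for the trained Keras model (which would be called through something like `model.predict` on a batch).

```python
def predict(utterance, word_index, model, intent_names, maxlen):
    """Predict intent probabilities for one utterance, reusing the training preprocessing."""
    # Same steps as for training: lowercase, map known words to indices, pad to maxlen.
    sequence = [word_index[w] for w in utterance.lower().split() if w in word_index]
    if not sequence:
        # Every token is unknown to the model: report a zero match for all intents.
        return {name: 0.0 for name in intent_names}
    padded = [0] * (maxlen - len(sequence[-maxlen:])) + sequence[-maxlen:]
    probabilities = model(padded)
    return dict(zip(intent_names, probabilities))

def fake_model(padded):
    """Placeholder for the trained network: fixed probabilities per intent."""
    return [0.1, 0.8]

word_index = {"are": 1, "you": 2, "open": 3, "hi": 4, "hello": 5}
names = ["greeting", "opening_hours"]
print(predict("are you open", word_index, fake_model, names, maxlen=5))  # {'greeting': 0.1, 'opening_hours': 0.8}
print(predict("qwerty asdf", word_index, fake_model, names, maxlen=5))   # {'greeting': 0.0, 'opening_hours': 0.0}
```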

Exposing the chatbot intent classifier as a REST API

The main.py module is in charge of exposing our FastAPI methods. As an example, this is the method for training a bot. It relies on Pydantic to facilitate the processing of the JSON input and output parameters. Parameter types are the dto version of the dsl classes.
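The endpoint itself needs FastAPI and Pydantic, but the DTO-to-DSL conversion it performs can be sketched with standard-library dataclasses only. All class and function names below are hypothetical stand-ins for the real dto and dsl classes; in the actual API, Pydantic models would play the role of the DTO dataclasses and be parsed automatically from the JSON request body.

```python
from dataclasses import dataclass, field

# DTO side: flat, JSON-friendly shapes received by the API (hypothetical names).
@dataclass
class IntentDTO:
    name: str
    training_sentences: list[str]

@dataclass
class ContextDTO:
    name: str
    intents: list[IntentDTO]

@dataclass
class BotDTO:
    name: str
    contexts: list[ContextDTO]

# DSL side: internal structures that can also hold training results (hypothetical names).
@dataclass
class Intent:
    name: str
    training_sentences: list[str]

@dataclass
class Context:
    name: str
    intents: list[Intent]
    trained: bool = False

@dataclass
class Bot:
    name: str
    contexts: list[Context] = field(default_factory=list)

def bot_dto_to_bot(dto: BotDTO) -> Bot:
    """Convert the API payload into the internal bot definition."""
    return Bot(dto.name, [
        Context(c.name, [Intent(i.name, list(i.training_sentences)) for i in c.intents])
        for c in dto.contexts
    ])

# Manual parsing of a JSON-like payload (the step Pydantic would do for us).
payload = {"name": "shop_bot", "contexts": [
    {"name": "main", "intents": [{"name": "greeting", "training_sentences": ["hi", "hello"]}]}]}
dto = BotDTO(payload["name"], [
    ContextDTO(c["name"], [IntentDTO(i["name"], i["training_sentences"]) for i in c["intents"]])
    for c in payload["contexts"]])
bot = bot_dto_to_bot(dto)
print(bot.contexts[0].intents[0].name, bot.contexts[0].trained)  # greeting False
```

Keeping the DTOs separate means the public JSON format can stay simple while the internal classes are free to carry extra state, such as the trained model of each context.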

Adding custom NER to your chatbot engine


Beyond intent matching, you may also need to recognize a certain number of parameters in the input text (e.g. references to people, places, dates,…). See how to do it in our next post in this series.

Ready to give it a try?

Great. Go over to https://github.com/xatkit-bot-platform/xatkit-nlu-server and follow the installation instructions. Even better if you watch/star it to follow our new developments. And even, even better if you decide to contribute to the project in any way, shape or form you can!