Chatbots are becoming a common component of many types of software systems. But they are typically developed as a side feature using ad-hoc tools and custom integrations. We believe chatbot design should play a more central role in software development processes. Even more, we believe chatbots could be the one and only User Interface offered by those systems, becoming a fully-fledged front-end mixing textual and graphical user interactions.

The specification and implementation of the user interface (UI) of a system are key aspects of software development. In most cases, this UI takes the form of a Graphical User Interface (GUI) that encompasses a number of visual components [6] to offer rich interactions between the user and the system.

The popularity of GUIs has resulted in a large number of GUI definition languages (e.g. IFML [2] is the latest standard) and run-time libraries for any imaginable programming language. Most non-trivial systems adhere to some kind of model-based philosophy [1] where software design models (including GUI models) are transformed into the production code the system executes at run-time. This transformation can be (semi)automated in some cases to improve productivity and code quality.

Nevertheless, these generators (the simplest example would be the scaffolding and CRUD-like functionality already available in many JS frameworks) are limited to “traditional” GUIs. The increasing popularity of Conversational User Interfaces (CUI) [8] has not yet impacted current software development processes. If a CUI is required, this is seen as a separate and ad-hoc project on top of the current system development.

Indeed, CUIs and GUIs are hardly ever regarded together, except for isolated experiments [7]. We believe this hampers the potential benefits of combining the best of both worlds. This is even more relevant now that CUIs are not limited to pure text (or voice) interfaces but are also incorporating some (so far still limited) visual components until now only typical of GUIs.

In fact, we think it is fair to wonder whether it still makes sense to have two separate UIs. Could these “rich CUIs” (i.e. CUIs enriched with visual UI components) completely replace current GUIs and offer a combined experience, where users could either interact graphically with the UI components or address them via voice or text-based utterances and commands?

A new architecture for software development with Chatbots at the core

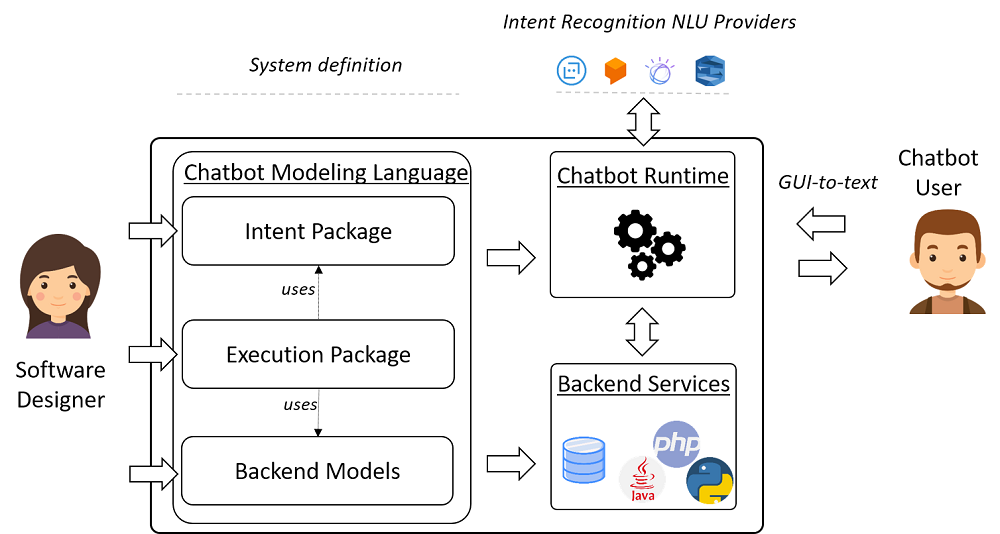

In this sense, at Xatkit, we propose a new development architecture where chatbots are first-class citizens of the development process. They are designed together with the rest of the system (the database and backend services) and deployed as the only front-end the system offers.

Our vision is summarized in the above figure. Action starts with the software designer specifying the models of both the front-end and back-end parts of the system at a platform-independent level. The difference is that now, the front-end is defined as a chatbot model. The chatbot model is composed of two different packages: the intent and the execution models.

The intent model describes the user intentions, covering both textual and graphical intents, i.e. clicking on a button or choosing an option in a group of radio buttons are defined as additional intents the chatbot will recognize. For the textual intents, we define, as usual, the training sentences and matching conditions. For the graphical intents, we list the events offered by the visual components. In any case, for every matched intent the software designer explains in the execution model how the chatbot should react by calling, if necessary, a back-end service whose behaviour has also been defined as part of the complete software specification.
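As a rough illustration of these two packages, the sketch below models textual and graphical intents side by side and binds each of them to a reaction. It is not Xatkit's actual modeling language: every class, field and function name here (`TextualIntent`, `GraphicalIntent`, `ChatbotModel`, `list_orders`) is a hypothetical name chosen for the example.

```python
from dataclasses import dataclass, field
from typing import Callable, Dict, List, Union


@dataclass
class TextualIntent:
    """Intent recognized from what the user types or says."""
    name: str
    training_sentences: List[str]


@dataclass
class GraphicalIntent:
    """Intent raised by an event on a visual component."""
    name: str
    component: str  # hypothetical component identifier
    event: str      # e.g. "click" or "select"


Intent = Union[TextualIntent, GraphicalIntent]


@dataclass
class ChatbotModel:
    """Intent model (intents) plus execution model (reactions)."""
    intents: List[Intent] = field(default_factory=list)
    reactions: Dict[str, Callable[[], str]] = field(default_factory=dict)

    def on(self, intent: Intent, reaction: Callable[[], str]) -> "ChatbotModel":
        # Register the intent and the reaction the bot runs when it matches.
        self.intents.append(intent)
        self.reactions[intent.name] = reaction
        return self


def list_orders() -> List[str]:
    # Stand-in for a back-end service defined elsewhere in the specification.
    return ["order-1", "order-2"]


def show_orders() -> str:
    # Reaction shared by the textual and the graphical intent.
    return "Your orders: " + ", ".join(list_orders())


model = (
    ChatbotModel()
    .on(TextualIntent("ShowOrders", ["show my orders", "what did I buy?"]), show_orders)
    .on(GraphicalIntent("ShowOrdersClicked", component="orders_button", event="click"), show_orders)
)
```

Both intents point to the same reaction, which is the property we care about: the designer describes what the bot does once, regardless of whether the user typed the request or clicked a button.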

From those models, the actual system implementation, including the chatbot-based front-end, is generated and deployed for its run-time execution. Matching of textual utterances is performed, as usual, by relying on an NLP engine (potentially external, e.g. Dialogflow) to classify them. Different strategies can be envisioned to process the events stemming from the visual components, but for homogeneity we propose the following one: clicks, selections and, in general, any interactions with graphical components are translated into plain text strings and matched following the same process as we do for input utterances. The generated text could be the name of the graphical element (in the case of a button) or the selected option (for radio buttons, lists, etc.). This guarantees a uniform treatment of all user interactions with the bot (instead of having to maintain two different matching engines) and facilitates the reuse of existing chatbot technology. Once the matching phase is done, the runtime engine reacts according to the execution model and replies to the user either with text or by presenting any rich UI component she should use in the next interaction.
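The uniform treatment described above can be sketched in a few lines. The event format, the `TRAINING` table and the function names are assumptions made for this example, and the matcher is a deliberately naive exact-match stand-in for a real NLP engine such as Dialogflow.

```python
from typing import Dict, List, Optional

# Hypothetical training sentences per intent. Note that the button label
# and the radio option are simply listed as additional sentences.
TRAINING: Dict[str, List[str]] = {
    "ShowOrders": ["show my orders", "what did I buy?"],
    "ChooseExpress": ["express", "I want express shipping"],
}


def event_to_text(event: Dict[str, str]) -> str:
    """Translate a graphical interaction into a plain text string."""
    if event["type"] == "button_click":
        return event["label"]      # the name of the graphical element
    if event["type"] in ("radio_select", "list_select"):
        return event["selected"]   # the selected option
    raise ValueError(f"Unsupported event type: {event['type']}")


def match_intent(utterance: str, training: Dict[str, List[str]]) -> Optional[str]:
    """Naive matcher (assumption): case-insensitive exact match only."""
    normalized = utterance.strip().lower()
    for intent, sentences in training.items():
        if normalized in (s.lower() for s in sentences):
            return intent
    return None


def handle(user_input, training: Dict[str, List[str]] = TRAINING) -> Optional[str]:
    """Single entry point: events become text, then everything is matched alike."""
    text = event_to_text(user_input) if isinstance(user_input, dict) else user_input
    return match_intent(text, training)
```

With this in place, `handle("show my orders")` and `handle({"type": "button_click", "label": "Show my orders"})` go through exactly the same matching code, so only one engine has to be maintained.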

Open Challenges

We believe this vision is feasible in the short/mid-term since many of the required technological pieces already exist but are not yet glued together. Still, a few important challenges must be solved. We describe four of them, each one focusing on different aspects of the proposed architecture.

Chatbot Modeling and generation

On the one hand, a few chatbot frameworks come with Domain-Specific Languages (DSLs) to model bots at a higher abstraction level (see [4] as an example) but they only generate the chatbot layer, not the complete software infrastructure. On the other hand, low-code tools generate the full system but mostly ignore any kind of chatbot capability or see it just as a dispensable and auxiliary component. Clearly, native integration of chatbot frameworks in current low-code tools is required. This implies adding chatbot modeling primitives to the low-code languages (very few low-code tools have some kind of chatbot support right now) and extending current code generators to automate the creation and deployment of chatbots and their interactions with the rest of the system. Only then can chatbots be used as a replacement for the traditional GUI layer generated by low-code tools so far.

Backend integrations

The whole point of a front-end is to be the interface between the user and the back-end services she needs to execute. For a chatbot front-end to be useful, the chatbot needs to be able to interact with all kinds of back-end services and not just reply using predefined and hard-coded textual responses.

As such, chatbot frameworks should, at the very least, embed native support for interacting with backend services exposed as REST APIs [5]. Other potential integrations could include GraphQL and asynchronous APIs. Custom integrations with specific customer stacks should also be possible.
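To make the REST case concrete, here is a minimal sketch of a reaction that queries a back-end service using only the Python standard library. The base URL, the `orders` resource and the shape of the JSON response are all hypothetical, and a real integration would also need authentication and error handling.

```python
import json
from typing import Dict, Optional
from urllib.parse import urlencode
from urllib.request import Request, urlopen

BASE_URL = "https://backend.example.com/api"  # hypothetical back-end


def build_request(resource: str, params: Optional[Dict[str, str]] = None) -> Request:
    """Build a GET request for a REST resource, asking for JSON."""
    url = f"{BASE_URL}/{resource}"
    if params:
        url += "?" + urlencode(params)
    return Request(url, headers={"Accept": "application/json"}, method="GET")


def call_backend(resource: str, params: Optional[Dict[str, str]] = None):
    """Send the request and decode the JSON body (performs network I/O)."""
    with urlopen(build_request(resource, params), timeout=10) as response:
        return json.loads(response.read().decode("utf-8"))


def reply_with_open_orders() -> str:
    """Reaction: turn the back-end answer into a chatbot message.

    Assumes the service returns a JSON list of objects with an "id" field.
    """
    orders = call_backend("orders", {"status": "open"})
    return "Open orders: " + ", ".join(str(order["id"]) for order in orders)
```

The point of the sketch is the shape of the reaction: the reply is computed from live back-end data instead of being a hard-coded string.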

A library of UI components for chatbots

In web development, there is a myriad of libraries with ready-to-use visual UI components. Initiatives like Web Components aim at facilitating their reusability in any specific front-end framework.

We would like to see a similar effort in the chatbots domain. Current chatbot frameworks either offer very limited components (mainly buttons) or force designers to write custom JavaScript code to build rich CUIs. These UI chatbot libraries should also be designed keeping in mind that not all chatbot deployment platforms offer the same capabilities (e.g. differences in messaging platforms).
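One way such a library could cope with platform differences is graceful degradation: the designer asks for a component once, and the library renders it according to what the target platform supports. The sketch below assumes a hypothetical capability table and message format; neither reflects a specific messaging platform's API.

```python
from typing import Dict, List, Set

# Hypothetical capabilities of each deployment platform.
PLATFORM_CAPABILITIES: Dict[str, Set[str]] = {
    "web_widget": {"buttons", "radio"},
    "sms": set(),  # plain text only
}


def render_choice(prompt: str, options: List[str], platform: str) -> dict:
    """Render a 'pick one option' component for the given platform."""
    capabilities = PLATFORM_CAPABILITIES.get(platform, set())
    if "buttons" in capabilities:
        # Rich rendering: one button per option.
        return {"text": prompt, "buttons": list(options)}
    # Fallback: a numbered list the user can answer by typing.
    numbered = "\n".join(f"{i}. {option}" for i, option in enumerate(options, start=1))
    return {"text": f"{prompt}\n{numbered}"}
```

Unknown platforms fall back to plain text, so a bot definition stays deployable even where no rich component is available.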

Voice Support to interact with rich UIs

A chatbot-based front-end has the interesting side effect of opening the door to voice interaction with the software system, given that many chatbot frameworks embed some kind of predefined support for voicebots.

Still, voice assistants usually work best when the person employs full and correct sentences and/or a predefined set of known commands. Therefore, in order to successfully use voice to interact with the graphical components of the chatbot interface, we would need to develop some specific commands (or “skills”) for that purpose. This work could build on the few initiatives that have explored voice commands to fill forms and other UI components [3, 9]. This line of work would also improve the accessibility of the system itself.
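A predefined command set of this kind could start as simply as the sketch below, which maps a transcript (assumed to be already produced by a speech-to-text step) to an action on a UI component. The three command patterns and the output format are illustrative assumptions, not an existing skill definition.

```python
import re
from typing import Dict, Optional

# Hypothetical command grammar: (action name, pattern) pairs, tried in order.
COMMAND_PATTERNS = [
    ("click", re.compile(
        r"^(?:click|press|tap)(?: on)?(?: the)? (?P<target>.+?)(?: button)?$")),
    ("select", re.compile(
        r"^(?:select|choose|pick)(?: the)? (?P<value>.+?) (?:in|for|from)(?: the)? (?P<target>.+)$")),
    ("fill", re.compile(
        r"^(?:set|fill)(?: in)?(?: the)? (?P<target>.+?) (?:to|with) (?P<value>.+)$")),
]


def parse_voice_command(transcript: str) -> Optional[Dict[str, str]]:
    """Map a transcript to a UI action, or None if no command matches."""
    text = transcript.strip().lower().rstrip(".!?")
    for action, pattern in COMMAND_PATTERNS:
        match = pattern.match(text)
        if match:
            return {"action": action, **match.groupdict()}
    return None
```

Anything that does not match a command returns `None` and can then be handed to the regular intent matcher, so voice commands extend the conversation rather than replace it.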

Final Message

Given the growing importance of chatbots in all types of software systems, we propose to make chatbot development a key concern in all software development methods and tools. Not only as a complement of the “traditional” GUIs generated by those systems but even as their complete replacement thanks to the richer conversational user interfaces that chatbots can now offer.

We believe our view is feasible and would bring significant benefits to the software and CUI communities. We plan to advance in this direction by tackling some of the challenges described above. Also, to avoid reinventing the wheel, we would like to bring to the world of chatbot design the lessons learnt in all these years of GUI development.

Feel free to start exploring the power of chatbots as front-ends by downloading and “playing” with Xatkit, our open source flexible chatbot development platform.

REFERENCES

[1] Marco Brambilla, Jordi Cabot, and Manuel 2017. Model-Driven Software Engineering in Practice, Second Edition. Morgan & Claypool Publishers.

[2] Marco Brambilla and Piero 2014. Interaction flow modeling language: Model-driven UI engineering of web and mobile apps with IFML. Morgan Kaufmann.

[3] Kian Kok Cheung, Edwin Mit, and Chong Eng 2010. Framework for new generation web form and form filling for blind user. In 2010 International Conference on Computer Applications and Industrial Electronics. IEEE, 276–281.

[4] Gwendal Daniel, Jordi Cabot, Laurent Deruelle, and Mustapha 2020. Xatkit: A Multimodal Low-Code Chatbot Development Framework. IEEE Access 8 (2020), 15332–15346. https://doi.org/10.1109/ACCESS.2020.2966919

[5] Roy Fielding, Richard N. Taylor, Justin R. Erenkrantz, Michael M. Gorlick, Jim Whitehead, Rohit Khare, and Peyman Oreizy. 2017. Reflections on the REST architectural style and “principled design of the modern web architecture”. In Proceedings of the 2017 11th Joint Meeting on Foundations of Software Engineering, ESEC/FSE 2017, Paderborn, Germany, September 4-8, 2017. 4–14.

[6] Jesse James 2010. Elements of user experience, the: user-centered design for the web and beyond. Pearson Education.

[7] Johan Levin. 2019. A chatbot-based graphical user interface for hospitalized patients: Empowering hospitalized patients with a patient-centered user experience and features for self-service in a bedside tablet. Master’s thesis. Chalmers University of Technology.

[8] Michael F. 2002. Spoken Dialogue Technology: Enabling the Conversational User Interface. ACM Comput. Surv. 34, 1 (March 2002), 90–169. https://doi.org/10.1145/505282.505285

[9] S Parthasarathy, Cyril Allauzen, and R Munkong. 2005. Robust access to large structured data using voice form-filling. In Ninth European Conference on Speech Communication and Technology.

Hi team,

Does this bot support connecting to databases (mostly Oracle), and can it be deployed on individual servers or is it cloud-based?

Hi Akash,

The bot can interact with external APIs, and in the new version of the engine we are reimplementing Xatkit as a Java Fluent API, meaning that it will be easier to write any arbitrary code in response to the user questions.

You can deploy the bot on your own server.